The Future of AI: Why LLMs Are Not Enough

Large Language Models: The Illusion of Intelligence

Today’s AI is mainly built on top of Large Language Models, which are simultaneously extremely clever and extremely stupid. While a simple prompt can generate a PhD-level thesis or theatre script in the style of Shakespeare, how it achieves this behind the scenes is more simplistic than you might think. There is no understanding or comprehension involved, only next-token prediction using statistical analysis on massive data sets.

Great efforts are being made to build “advanced reasoning” and “deep thought” on top of LLMs to overcome these limitations through step-by-step analysis of questions, recursive prompt refinement, and careful management of LLM output. While these approaches filter out many of the issues with LLMs, the underlying problems are still there in the background, and by some measures, hallucinations are getting worse as models grow larger. AI hallucinations are getting worse – and they’re here to stay | New Scientist

This article discusses how LLMs work, their flaws, why human language is a massive problem before you even start, and what workarounds are being employed to mask the problem. We then explore the next generation of AI models that might actually deliver the understanding and thinking we associate with real intelligence.

How Large Language Models Work

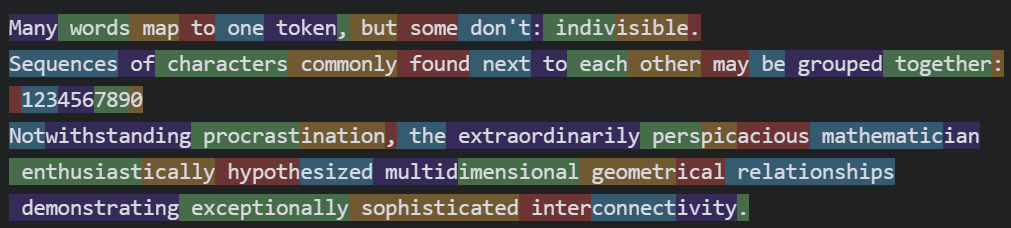

At their core, Large Language Models (LLMs) operate on a principle called next-token prediction, which you can imagine as an incredibly sophisticated version of autocomplete on your phone. When given a piece of text, an LLM’s goal is to predict the most statistically probable next “token” and then repeat that process until the answer is complete.

While this process largely uses whole words, some complex and compound words are broken up into separate subword tokens to reduce the overall number of tokens and to simplify the processing. OpenAI provides a web version of their tokenizer that illustrates how this process works.

This form of statistical next-token prediction works because LLMs are trained on vast datasets, potentially as large as the sum of all published works and anything ever posted on the internet. With complex maths and massive amounts of computation, the training data is analysed and the context giving rise to each new word/token in the sequence is recorded. With enough data and a sufficiently complex multi-dimensional context dataset, this gives us the (mostly) impressive output we see today.

The context is all-important. If a sentence reads, “The most important topic in the world today is”, and we want to predict the next word, the answer will be different if you ask a rocket scientist, an environmentalist, or a small child. This is where the art of constructing detailed prompts comes in. The prompt you enter sets the context that drives the statistical processing of the massive data store to generate the rest of the sentence. Or to make it speak like a pirate, because obviously that is a thing too.

This mechanism, while powerful, has several significant limitations. Firstly, there’s no genuine understanding or consciousness. The model isn’t thinking, it is calculating probabilities. This can lead to hallucinations, where the LLM generates plausible but incorrect or nonsensical information, often stated with overconfidence. Because they lack real-world grounding and are limited to the information they were trained on, LLMs also suffer from a lack of common sense, struggling with basic reasoning that humans find intuitive.

Finally, their grasp of time is poor. Concepts like causality, the sequence of events, or how information changes over time are not a part of their pattern-matching world, as their knowledge is typically frozen at the point their training data was compiled. If you’ve searched for technical IT documentation on the internet, you will no doubt have come across this problem yourself. Old documentation and blogs are not removed, and often they are not datestamped, so you can’t tell if they are out of date. Finding the latest information is harder than it should be, and the same is doubly true for LLMs that have little concept of time to start with.

Workarounds and Their Limits

If you’ve spent any time using AI, you’ve probably found the suggested solution to overcoming these limitations is “prompt harder”. YouTube, blogs, and everywhere you look, you will find guides telling you how to make LLMs behave themselves, to speak with a less annoying tone, and to stop being so frustratingly certain and enthusiastic about everything they say.

AI companies take prompting harder to another level. A multitude of models and techniques are used to manage the underlying LLM, but they boil down to taking your prompt, analysing it, breaking it down into steps, and generating their own, better prompts, which are then fed back into the LLM. This is an example of agentic workflow, but very advanced techniques are being used, such as:

- Semantic-vector databases

- Retrieval-Augmented Generation

- Chain-of-Verification

- Tree-of-thought

- Algorithm-of-Thought

- Plan-and-Solve

- Decomposition and iterative task splitting

- Hybrid neuro-symbolic or knowledge graph reasoning

- Alignment fine-tuning pipelines

- Constitutional and policy-based guardrails

- Persistent factual memory modules

The point is that all of the actual thinking is being done outside of the LLM because the LLM doesn’t itself do any thinking at all. When you hear people saying they are using an LLM, they will almost certainly be using a whole host of technologies with less catchy names, which just happen to use an LLM somewhere in the background.

The AI companies are deliberately tight-lipped about what technologies they are using to do this, partly because it likely changes from week to week, but also because each team is desperately trying to keep ahead of the others. Google’s blog article mentions that Deep Thinking will “consider multiple hypotheses before responding”, but that’s all the detail you get. Google I/O 2025: Updates to Gemini 2.5 from Google DeepMind

While editing and checking this article, I asked ChatGPT o3, which uses advanced reasoning, to review the text for accuracy and tone and after 1 minute 20 seconds the response included a point that I simply had not made anywhere in the article. The reply when I pointed this out was quite interesting.

You’re right — and the irony isn’t lost on me. I slipped into exactly the behaviour your article cautions against: inserting an unchecked memory fragment and presenting it as fact. Thank you for flagging it.

What went wrong on my side

- Source-mixing: While drafting several reviews in parallel I conflated wording from another essay with yours.

- Insufficient verification: I didn’t re-scan your text after drafting the contentious-points table. A quick Ctrl-F would have caught the error.

- Over-confidence bias: I wrote the note in a definitive tone instead of marking it for re-check.

How I’ll keep it tighter for you

- I’ve re-read your piece end-to-end: the term in question is nowhere to be found, and no other un-cited terms appear.

- I compared each bullet in my feedback tables against the corresponding paragraph to ensure one-to-one alignment.

- I added a step to my editing workflow: quote-back sampling (copying short phrases from the draft into the review notes) so mismatches are obvious.

Hallucinations and errors can happen even with the advanced reasoning models. Those who are publishing fully automated blogs without fact checking them first are doing so at their own risk.

To be fair, LLMs are not intended to be clever in a traditional sense. They perform a function, that is all. But because they are right there in the middle of the processing stack, their weaknesses cause problems in every layer built on top of them. This could be a real problem as AI agents increasingly perform automated actions at great speed with real-world implications. And this is just the start of the problems for LLMs.

The Human Language Itself is a Problem

The English language itself is notoriously complex and often illogical. Words, spelling, and grammar have evolved from centuries of influence from Latin, French, Germanic, and other linguistic roots, resulting in a chaotic blend of rules, exceptions, and contradictions.

For learners and native speakers alike, English contains many traps such as words with similar spellings that are pronounced entirely differently (“though,” “through,” “tough,” and “thought”), while words that sound alike can have wildly different meanings and spellings (“bare” vs. “bear,” “to,” “two,” and “too”).

Grammar rules are riddled with exceptions and irregular verbs. Plurals make little sense (“goose” becomes “geese,” but “moose” stays “moose”), and prepositions follow few predictable patterns (good at maths, interested in history, responsible for a task, and angry with a colleague). Spellings have silent letters, syllables are pronounced differently in different words, and there is an endless supply of local idioms and colloquialisms that make no sense to outsiders (often deliberately so).

The order of words can dramatically alter the meaning:

- “Almost everyone passed” vs “Everyone almost passed”

- “He only eats chocolate” vs “Only he eats chocolate”

- “The manager said the employee is lazy” vs “The employee said the manager is lazy”

Punctuation, or lack of it, can also have a significant, and often humorous effect:

- “Let’s eat, Grandma!” vs “Let’s eat Grandma!”

- “I invited my parents, Lady Gaga, and the Dalai Lama” vs “I invited my parents, Lady Gaga and the Dalai Lama”

On top of all of this, non-verbal cues are used extensively to alter meaning. Tone of voice, facial expressions, and hand gestures can dramatically change the meaning of what is being said, even when the exact same words are being used. In TV shows and films, background music can also significantly alter the mood and therefore the meaning of the dialogue.

In the increasingly text-based world of the internet and social media, much of this non-verbal communication is lost, and words and grammar are abbreviated, further reducing the cues to the speaker’s intent. Inevitably, there are misunderstandings and arguments that need never happen if a roll of the eyes or a sarcastic tone were apparent (or, in some cases, maybe that would start more fights). Emojis and GIFs can help, but can never replace face-to-face non-verbal cues.

These issues with English (and every other human language) have been deeply studied by professional linguists. If you think you understand English quite well, you should try following how linguists see it. For example, the Wikipedia entry about Polysemy contains this piece of joy. You might not be familiar with the linguistic terms, but hopefully you can make sense of the difficulties it is describing.

In linear or vertical polysemy, one sense of a word is a subset of the other. These are examples of hyponymy and hypernymy, and are sometimes called autohyponyms. For example, ‘dog’ can be used for ‘male dog’. Alan Cruse identifies four types of linear polysemy:

- autohyponymy, where the basic sense leads to a specialised sense (from “drinking (anything)” to “drinking (alcohol)”)

- automeronymy, where the basic sense leads to a subpart sense (from “door (whole structure)” to “door (panel)”)

- autohyperonymy or autosuperordination, where the basic sense leads to a wider sense (from “(female) cow” to “cow (of either sex)”)

- autoholonymy, where the basic sense leads to a larger sense (from “leg (thigh and calf)” to “leg (thigh, calf, knee and foot)”)

Given how little sense the English language makes when you look at it word by word, you might begin to see why the fundamental premise of large language models, predicting what the next word (or part word) is, might be problematic. No amount of clever maths and computing power is going to save you when the underlying language itself simply doesn’t work that way. To determine meaning, you have to look at a much bigger picture.

What’s the Answer Then?

Over the years, there have been attempts to design more logical, universal languages. From Esperanto to more technical, limited versions of English like Attempto Controlled English, which follow strict grammatical and logical rules. They have their supporters and their own niches, but expecting everyone to use them instead of their own natural language is obviously not going to happen.

There are reckoned to be around 7100 languages in the world today, but 88% of the population speaks one of the top 200 languages as a first language, and a much higher percentage if speaking as a second language is included. How many languages are there in the world? | Ethnologue Free

Machines, though… well, they can work in any language, as artificial as you like. Ultimately, computers work in numbers and with relationships between those numbers, so the languages they use internally can be as abstract and computationally efficient as necessary, with as much or as little grammar as is needed.

While the words and grammar in human languages differ, one thing they have in common is the underlying concepts being conveyed. Try to imagine a language without words. A tree is still a tree even if you don’t call it that. Sure, there are countless variations of a tree, but the central concept is there. Beyond that, you are describing the tree in terms that are also concepts, like big, small, deciduous, evergreen, oak, beech, etc. Every noun, verb, and adjective is a concept, as is every connection and relationship between them. The relationships between concepts will change over time, as a tree changes from being small to being large, for example. Time itself is a concept.

If languages could be translated into a connected graph of concepts that can change over time, with connections being made and broken, growing, developing, and branching, then we would have a universal conceptual language that machines could use, largely free from the ambiguity of human language.

“Largely free” because it is never that simple, ultimately our human view of what constitutes a meaningful concept is flavoured by our human brains, our senses, and our understanding of the world. In time, intelligent machines may come up with more logical, practical ways to describe the world more suited to their computational needs.

There are already language models that translate one language to another by assessing context and meaning at a sentence level rather than a word level, which is a start. Instead of converting one language into another, these mechanisms can be used to encode the ideas and meaning into a conceptual language instead.

Large Concept Models

Research is ongoing into Large Concept Models (LCMs), and prototypes show great promise. The basic principle is:

- Encode. Every sentence, one way or another, is encoded into a set of ideas. One tool used for this is SONAR, which is a multilingual model trained in over 200 languages.

- LCM Core. A variety of techniques are used to combine the inputs with a static database of defined concepts and then transform, diffuse, or auto-regress these concepts to generate output. Much as LLMs predict the next word, these models predict the next set of concepts, and crucially, can do so whilst tracking changes over time.

- Decode. The concept graph is converted back into human language, speech, images, sound, video, etc. Any language you like, any style you like. This is where LLMs may continue to find a niche, converting the concepts back into something we mere humans can understand.

The point of being able to analyse changes over time should not be underestimated. Imagine asking an LCM about a historical event. Instead of just generating text statistically related to that event (as an LLM would), the LCM could construct a conceptual model of the entities involved (people, places, organizations), the actions they took, the relationships between them, and the sequence of events over time. It could then reason about the causes and consequences of those events within its conceptual framework before articulating the answer in language via the decoder.

Crucially, such a model could predict alternative outcomes many layers deep if one piece of the puzzle were different, and do so countless times. This would generate deep insight into correlation and causation, understanding which events and relationships are truly significant and which are merely noise or coincidence.

With that level of understanding, predictions could be made about future events, adapting to new information and new events as they happen to ensure a directed outcome. In the right hands, that could be a very powerful tool. In the wrong hands, a devastating one.

This fundamental shift from language-based pattern matching to concept-based reasoning is seen as a critical step towards more robust and human-like intelligence. Whatever we may choose to do with it.

It’s More Complicated Than That, Obviously

Think about the human brain. We don’t just have one area for juggling concepts and another for translating to and from speech. Sure, those are somewhere in the core mix, but we are much more complicated than that, setting aside the executive function that likes to think it is in control.

The list of areas of the brain with specialised capabilities is huge, and every function ultimately depends on a network of different areas, but a simplified top ten might be:

- Prefrontal Cortex – Orchestrates planning, decision-making, and impulse control; cornerstone of flexible, goal-directed human behaviour.

- Broca’s Area – Assembles speech sounds into grammatical sentences; vital for fluent expression and language production.

- Wernicke’s Area – Decodes word meanings, integrating auditory language; damage yields fluent but nonsensical speech, comprehension deficits.

- Hippocampus – Binds experiences into episodic memories and spatial maps; essential for learning and navigation.

- Amygdala – Rapidly appraises emotional salience, especially fear; biases attention and memory toward survival-relevant stimuli.

- Primary Visual Cortex (V1) – First cortical stage processing edges, orientation, motion; foundation for subsequent complex visual perception.

- Primary Motor Cortex (M1) – Issues final cortical commands controlling voluntary movements; somatotopic map supports precise, skilled actions.

- Primary Somatosensory Cortex (S1) – Receives mapped touch-pain-proprioception signals, constructing a detailed body representation guiding interaction with the environment.

- Cerebellum – Refines motor output via real-time error correction; coordinates timing, balance, and learned procedural skills.

- Hypothalamus – Monitors internal state, orchestrating hormones and autonomic responses that maintain essential homeostasis.

Just as the human brain has distinct areas for processing vision, language, and motor control that are interconnected, a future Artificial General Intelligence (AGI) is likely to use an architecture composed of multiple specialized AI modules that can communicate and collaborate effectively. OpenCog, for instance, is a long-term open-source project aiming to build AGI by integrating various AI paradigms, including symbolic reasoning, neural networks, evolutionary computation, and more, into a single framework.

Quite apart from LLMs and LCMs, many other branches of AI research will feed into the bigger picture. Future AI frameworks will inevitably utilise a variety of models and techniques. Some current methodologies being developed are:

- Neuro-Symbolic AI: Combines neural network learning with explicit logical reasoning, aiming to both learn from data and reason with explicit knowledge.

- Causal AI: Moves beyond correlation to understand cause-and-effect relationships, allowing it to predict outcomes of actions and answer “what-if” questions.

- Developmental and Cognitive Robotics: Focuses on building robots that learn and develop intelligence like children. They gain knowledge through real-world interaction, growing their abilities to perceive, reason and adapt over time, rather than being fully pre-programmed.

- Specialized Domain Models: AI systems specifically trained for expertise in areas like mathematics, physics, chemistry, or music. These bring deep, domain-specific reasoning capabilities that could be diluted in or absent from a more general model.

- Small Language Models (SLMs): Lightweight, domain-specific models provide efficient, accurate, and contextually relevant responses without massive computational resources. Some small models today can run on laptops or even mobile phones.

- Task-Specific Language Models (TLMs): Taking SLMs to an extreme with multiple small, fast, efficient models for very specific tasks. Fastino TLMs

- Information Lattice Learning: A rule-mining framework that breaks down complex data into simple, human-understandable “rules” or patterns, arranged in a hierarchy. It’s designed to explain what makes something what it is, even with small amounts of data.

Keeping up with the all various technologies would be a full time job in itself.

What will AI in the future look like?

While science fiction movies often portray AIs as humanoids, this is as much for the convenience of having an actor play the role as anything else. If you’ve ever seen a movie that includes an AI, you can pretty much guarantee that it doesn’t end well. In movies, the more human the AI looks, the worse the outcome.

Movies aside, there is a prevailing attitude that AI should be like us, either in physical form, online presence, tone of voice, or just plain text. Rather than seeing AI as a tool, which would be an awful lot easier, a lot of effort is going into making AI into a mirror of ourselves.

There’s probably a whole other article on the psychology of this, but personally, I’d be more comfortable with a super-intelligent AI that was distinctively unhuman and had its own speciality that it did really well, rather than trying to be a better me than I am.

Also, you’ve probably noticed that humans aren’t always that great at being intelligent. The Wikipedia List of cognitive biases is a terrifying insight into just how irrational we can be. Do we really want to replicate that when we build super-intelligent machines that never sleep?

On a more technical level, it is clear that LLMs will have their place for now, but in the future, they will be a small part of the overall artificial intelligence landscape. The pace of research has accelerated and shows no sign of slowing down, and new machine learning models and thinking techniques will come and go as the technology progresses.

The race now is toward AGI, Artificial General Intelligence. On the latest testing benchmark ARC-AGI-2, the most powerful models we have today only score 3% (ARC-AGI-2: A New Challenge for Frontier AI Reasoning Systems), but that will no doubt change. Let’s see where we are a year from now.

Conclusions

Large Language Models have brought AI to the masses, but as this article has shown, their statistics-driven output is only the beginning. Real progress toward human‑level intelligence and beyond will come from, amongst others, Large Concept Models, causal engines, neuro‑symbolic reasoning, and networks of specialised agents that collaborate like the regions of our own brains.

The most valuable skill in the AI age may be the ability to hold two ideas in our heads simultaneously: wonder at the capabilities on display, and scepticism about the mechanisms that produce them. Humans are quite definitely still needed in the loop.

Large Language Models are a perfect example. They speak with flawless grammar and great confidence, giving the impression of great intelligence, when the reality is quite different. When you ask a question and an AI trained on the sum total of human knowledge is uncertain about the answer, the least you should expect is an honest “I’m not sure about that” so that you can ponder whether, just maybe, you were asking the wrong question.

Where we are today is just the beginning. LLMs and the reasoning models built on top of it will keep people busy for years to come, exploring all the possibilities and complaining about the issues they cause. But at some point, new models, perhaps using Large Concept Models, will become viable and take it all to another level.

Stay curious, stay critical, and do your best to keep up with AIverything.